Binary Perceptron Classifier

What Is A Binary Perceptron?

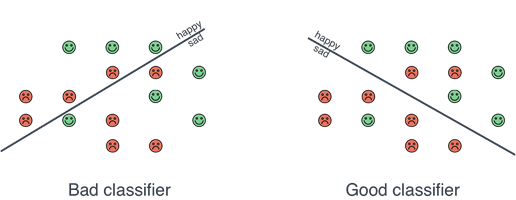

The binary perceptron algorithm is a machine learning algorithm that classifies data into two groups. It assumes that the data points are linearly separable (a line can separate all the data into its two classes with no training error), therefore its accuracy is highly dependent on this.

Each of the inputs (i.e. features) of the data is assigned a weight (for our algorithm this is initially assigned as 0) and one bias is assigned to the data as a whole (for our algorithm this is initially assigned as 0). And activation score is then calculated by summing the product of the weights and the feature inputs and adding the bias. To check if the classification is correct, the target (either 1 or -1) is multiplied by the activation score. If the result of this multiplication is positive, the classification is correct. If it is negative, the classification is wrong because:

If a negative class (-1) is correctly predicted (-1) multiplying the predicted by the actual results in a positive (-1 * -1 = +1). A Correctly predicted positive will also always be posoitive (+1 * +1 = +1). A wrong prediction will always result in (-1 * +1) or (+1 * -1) which will both always be negative.

So, all wrong predictions will always result in a negative product as will need adjustment. The weights are made bigger by adding them to the product of the prediction value and the feature input value to bring it closer to being positive. The bias is also adjusted by adding the prediction value to it and the loop begins again.

When the maximum number of iterations is reached, an adjusted bias and weights are returned which should be more accurate. These will then be used when attempting to predict the outputs.

This project will initially classify on a 1 vs 1 basis before moving onto a 1 vs rest example.

Pseudocode

Import necessary libraries

Load the files with the training and test data OR split the data into a training and test set

Create a function to train the Perceptron algorithm with inputs as Training Data & Maximum iterations i.e. PerceptronTrain(Training Data: td, MaxIterations)

Weights initialised as 0 for all elements of td i.e. (wi = 0 for all i = 1, ….td;)

The initial bias is 0 i.e b = 0

Begin algorithm FOR iteration 1 …. MaxIterations do performs algorithm for MaxIerations times

For all (x̄, y) ∈ td do: for all features x̄ and predictions y perform the following

A = W̅T * X + b Activation score is the weights times the features plus the bias

If y*A <= 0 then if the activation is below 0 W̅ and b need updating

wi = wi + y ⋅ xi for all i = 1,…, td weights updated by adding y times features

b = b + y new bias by adding y to the bias

return b, w1, w2 …, wd This returns the updated weights and biases

Code:

Results:

Using unshuffled data, the accuracy when testing the perceptron against the train and test data is as follows:

Class-1 vs Class-2:

Test data: - 100%

Train data: - 100%

Class-1 vs Class-3:

Test data: - 100%

Train data: - 100%

Class-2 vs Class-3:

Test data: - 50%

Train data: - 50%

The pair of classes that is most difficult to separate is class 2 and class 3 as they were not linearly separable upon closer inspection.

For 1 vs rest the accuracies:

Test data: - 66.67%

Train data: - 66.67%